Chapter 6. The European Union’s results, evaluation and learning

Management for development results

The European Union has made significant progress in establishing comprehensive results-based management frameworks. At the same time, the systems could be consolidated and harmonised across the different European Union institutions, and better use could be made of the results information for learning, programming and strategic planning.

A new comprehensive management framework for development results

Over the past three years, the European Union (EU) has introduced a comprehensive approach to management systems for development results, including by providing strong support for developing country partners to collect better statistics. Furthermore, in 2017, the new European Consensus on Development (Council of the European Union, 2017) committed EU actors and member states to align results frameworks to the 2030 Agenda for Sustainable Development, prioritising use of country-based results frameworks and Sustainable Development Goal (SDG) indicators.

The 2012 DAC peer review encouraged the EU to increase its attention to results, particularly by ensuring a focus on impact and using the information to learn lessons for improved performance over and above financial accountability. In this context, and as part of the implementation of its Agenda for Change (European Commission, 2011), the EU reformed its approach to results by introducing a new results framework to improve monitoring and reporting, as well as to enhance accountability, transparency and visibility of the EU’s development co-operation.

The International Cooperation and Development Results Framework (EURF) was first introduced by the Directorate-General for International Cooperation and Development (DG DEVCO) in 2015, then adopted by the Directorate-General for European Neighbourhood Policy and Enlargement Negotiations (DG NEAR) for the EU’s neighbourhood countries. The Directorate-General for European Civil Protection and Humanitarian Aid Operations (DG ECHO) is currently developing its own results framework that will give an overview of its achievements and internal processes in the context of humanitarian needs and risks at global, regional and country levels.

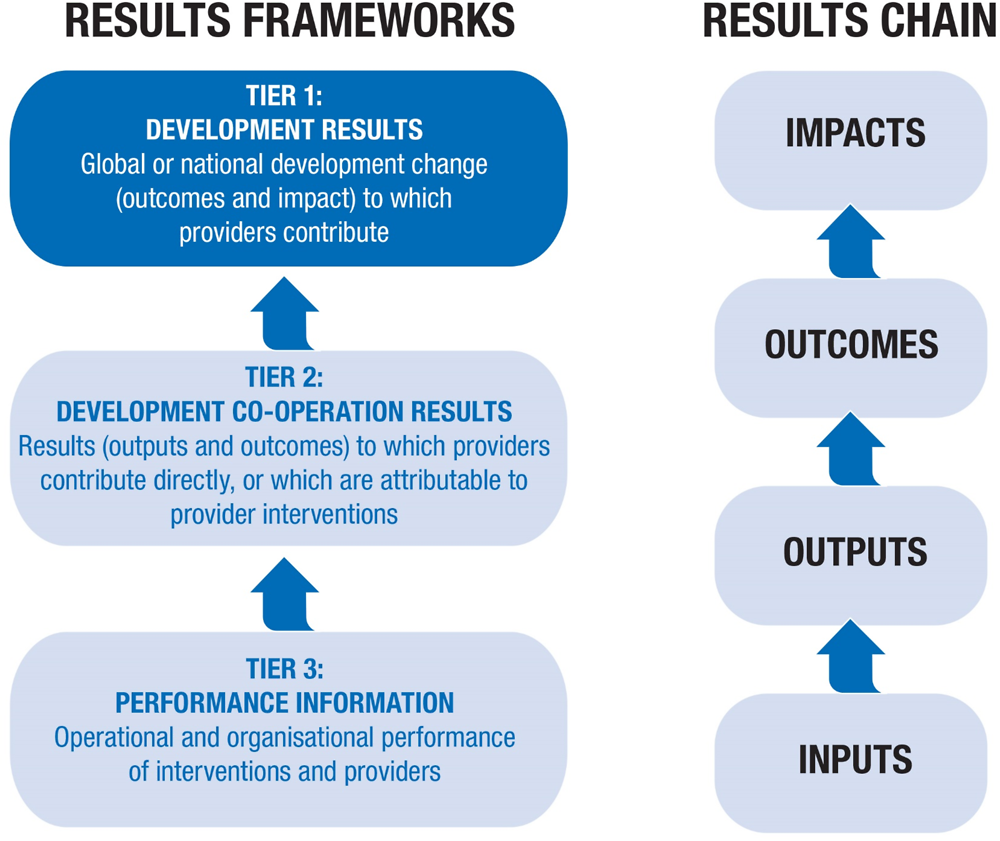

The EURF is articulated around three levels, in line with the results frameworks of the World Bank and some other bilateral and multilateral partners, as shown in Figure 6.1. Within this system, Level 1 comprises development outcomes/impact on SDGs in partner countries, such as prevalence of stunting, to which the EU and other stakeholders contribute. Level 2 covers outputs and direct outcomes from EU-financed interventions (such as the number of kilometres of transmission lines); its indicators are identified on the basis of partner country criteria and, where possible, indicators used by other donors, and should also be linked to the SDGs.1 Level 3 is organisational performance related to EU operational processes (such as the percentage of invoices paid within 30 days). To facilitate results reporting for EURF at headquarters and in the field, including by partners, the Commission has established a new online operational information system, OPSYS. In addition, to assist EU delegations to focus on results management, the Commission continues to contract experts for the Results-Oriented Monitoring (ROM) system, which provides troubleshooting, assistance for input provision, monitoring of outputs and outcomes, and training in results-based management, among other activities.

The European Investment Bank (EIB) manages development results based on the 2012 results measurement (ReM) framework, which is harmonised with other multilateral development banks. The framework covers three pillars. The first is consistency with the EIB’s mandate and contributions to EU development priorities and partner countries’ development objectives. The second involves results of EIB interventions that contribute to the SDGs and indicators agreed with partner countries (e.g. the number of jobs created). The third is financial additionality in filling the local market gap, which can be provided through a financial contribution that is assessed in comparison with commercial alternatives, for example.

In terms of process, during project appraisal, the EIB sets targets for expected outputs and outcomes based on baselines. During the period of implementation, projects are rated according to the above three pillars. Upon project completion, performance is reviewed immediately and again three years later (EIB, 2017). Various themes such as gender consideration are also incorporated into the results framework. It would be useful for the EIB to discuss and share its work on development results with broader communities of practice that are considering how to improve assessment tools to measure the development impact of blended finance and other related initiatives.

Regarding strengthening countries’ own capacity to manage results through better statistics, the EU takes a leading role among donors. On aid for statistics, the Commission is the second biggest provider among DAC members, as well as the largest donor to small island developing states and to fragile states for such aid. In collaboration with Eurostat, it provides various types of support for statistics: national or regional statistical systems (e.g. EU-ASEAN Capacity Building Project); large-scale operations such as a population census; and sector statistics in education, health, and others (PARIS21, 2017).

The Commission’s budget support has a different results framework

The EU’s budget support programmes represent another results and performance-related system in that funds are disbursed only when agreed targets, which are aligned with partner countries’ policy priorities, are met. For general budget support, indicators generally relate to macroeconomic management (e.g. government gross debt as a percentage of GDP), domestic resource mobilisation (e.g. revenue as a share of GDP), public financial management (e.g. the Open Budget Index) and poverty reduction (e.g. a headcount of poor people).

Results for sector budget support are more specifically related to the particular area involved. For example, three sectoral budget support programmes in Bolivia focus on water and sanitation, agriculture, and anti-narcotics, with indicators aligned with the related national priorities and SDGs. Examples of such indicators include the percentage of the population connected to sewage systems (which relates to SDG 6.2); national coverage of comprehensive and free justice services (SDG 16.3); and quantity of seized drugs (SDG 16.4). In some cases, indicators agreed with the government included easily deliverable outputs rather than outcomes, in order to release the funds (Annex D). Following each disbursement for budget support, the EU delegation prepares a brief report for submission to headquarters in order to assess the results achieved and to draw lessons for future contracts. As these results are tailored to specific countries, however, they are not part of the EURF and are not recorded in OPSYS. It is therefore not clear how the results of the budget support programmes are aggregated at headquarters and used for learning and policy making.

Building on progress, further consolidation and harmonisation of the results framework could be pursued across the EU actors

At country level, EU actors have made significant progress on results management in recent years, establishing adequate results frameworks that facilitate target setting and providing incentives to achieve goals based on the SDGs and country priorities. In doing so, the EU has addressed the 2012 peer review recommendation to link objectives of activities with country strategies and to make monitoring regular and helpful to delegations. Furthermore, the data collected via OPSYS throughout the world will be used for the results management and evaluation functions at centralised and decentralised levels.

At the corporate level, however, it is not obvious how all the information collected at headquarters (particularly for Level 2 and EIB results) contributes either to defining overall results and trends or to drawing out common factors in success or failure - a disconnect that was also observed in the 2012 peer review. In particular, while there is no claim of attribution in achieving Level 1 outcomes, the basis for judging whether Level 2 outputs are satisfactory or not at the corporate level is unclear. Furthermore, due to the lack of benchmarks, it is difficult to determine value for money and to translate results into substantive policy making.

Therefore, aside from reporting for accountability and communication purposes, the EU could make better use of its results information for learning, programming and strategic planning. In this context, the EU actors could possibly learn from the experience of multilateral development banks and other DAC members in order to explore establishing a system to better utilise the results information gathered (OECD, 2017).

Moreover, there is scope to harmonise indicators and further consolidate reports to increase coherence and reduce the administrative burden.2 In other words, the EU would be well-served by a comprehensive approach to results-based management - and this applies to the various instruments used by DG DEVCO, DG NEAR, DG ECHO, the EIB and others engaged in development co-operation, particularly as they often work in the same partner countries, themes and sectors. At the same time, to guard against too rigid an approach, the EU could look to ensure that template-based approaches to results-based management have scope for flexibility and adaptability particularly in fragile contexts. In refining the architecture of its corporate results framework, the EU could consider articulating a stronger narrative and analysis of the contribution of the EU’s results to country level outcomes which are aligned to the SDGs (OECD, 2017).

Evaluation system

The European Union is refining its system for evaluation, which uses the DAC’s criteria, by establishing new guidelines, increasing joint evaluations and maximising usefulness. At the same time, the trade-offs of carrying out a participatory approach versus maintaining independence of evaluation results could be discussed further.

The Commission has new guidelines on evaluation but could further explore trade-offs in more independence versus buy-in

In 2013, the Commission established the principle of “evaluate first” for its overall evaluation system including for development co-operation (European Commission, 2013). This was refined in 2014 and summed up as “evaluation matters” in a set of overall principles governing the evaluation of the EU’s development co-operation (European External Action Service, 2014). The following year, the Better Regulation package spelled out guidelines on providing evidence for decision making.3 Based on these directives, DG DEVCO and DG NEAR carry out evaluations at two levels: centralised strategic evaluations by headquarters and decentralised evaluations by delegations. These use the DAC criteria of relevance, effectiveness, efficiency, impact and sustainability.

The centralised evaluations follow a five-year rolling programme that covers geographic evaluations of four to five countries or regions per year and thematic evaluations on topics such as resilience, conflict prevention, migration and governance. Geographic evaluations are selected based on financial coverage, regularity and regional distribution, with special attention to fragility-affected and conflict-affected countries. The choice of thematic evaluations is based on wide internal consultations with thematic and policy directorates. However, the bulk of evaluations are decentralised and carried out by delegations or operational units. They range from small projects to large facilities; projects above EUR 5 million and at least 50% of a multiannual programme of the country or unit must be included. The provisions for these decentralised evaluations are included in the costs of the programmes and projects. Until recently, the results of these evaluations generally stayed at the delegations, but they are now uploaded to the EVAL Module IT repository system4 to be shared with headquarters and other delegations. Nonetheless, how these decentralised evaluations are used for learning and future programming is unclear.

The respective evaluation units of DG DEVCO and DG NEAR are responsible for steering the evaluations. This includes co-ordinating among member states and, in particular, promoting joint evaluations for joint programming, as noted in the 2012 peer review. Evaluations, which generally use the information collected on results, are contracted out to independent consultants who have framework agreements through public procurement at headquarters or delegations. To carry out the tasks, DG DEVCO has seven evaluation managers in the evaluation unit and a budget of roughly EUR 3.6 million a year, while DG NEAR has four evaluation managers and a budget of roughly EUR 2.0 million a year.

To help operational managers to prepare, carry out and disseminate evaluations, the Evaluation Correspondents’ Network was set up in 2013; it is composed of about 120 staff designated across DEVCO services and delegations. A similar network was created by DG NEAR in 2015, including about 50 staff at headquarters and in EU delegations.

In DG DEVCO, when evaluations are finalised, they are submitted to the Inter-Service Group for comments, the heads of the evaluation units for approval and the relevant Commissioner for no-objection. The recommendations are made to the thematic services, which then express agreement, partial agreement or disagreement and propose actions to be taken by management. These actions are followed up one year later, with reporting to the Director General (EEAS, 2014).

In DG NEAR, the final evaluation reports are validated by the relevant Inter-Service Group. For each evaluation, a Follow-up Action Plan is drafted that lists the recommendations, which can be accepted, partially accepted or rejected, together with the actions foreseen for implementation by Commission services. The Follow-up Action Plan is approved by the Director General of DG NEAR before publication on DG NEAR’s public website, with implementation to be followed up one year later.

The EIB’s Operations Evaluation Department carries out independent evaluations of the Bank’s activities, mainly at the thematic or geographical level (the latter usually by region or subregion). The evaluation criteria follow principles defined by the DAC Evaluation Network and adopted by the Evaluation Co-operation Group5 of the multilateral development banks, with a focus on operational performance, accountability and transparency. The evaluation reports are approved by the EIB Board of Directors rather than Bank management, which guarantees the independence of the evaluations (EIB, 2015).

The 2012 DAC peer review raised concerns about the independence of the evaluations of the Commission because they were not being submitted directly to senior management. The Commission has since adopted a more participatory approach, involving staff in the needs assessment of evaluations, the development of terms of reference and the discussion of recommendations in order to maximise the value and buy-in of evaluations. While no formal incentives are in place, staff are also encouraged to use evaluation findings to improve programming. In addition, the timing of evaluations is adjusted to feed into the development of country strategies and to reinforce course correction and decision making during ongoing projects.

Given that a number of other DAC members such as Sweden and the United States also embrace a participatory approach to evaluation, an exchange of experience could help stimulate further progress in this area. This is particularly so regarding learning around the trade-offs involved in opting for more independent evaluations versus the benefits of increasing buy-in among operational staff. For example, some donors have developed hybrid models ensuring no conflict of interest or requiring external evaluators to limit bias and increase potential for learning from evaluations.

Institutional learning

The European Union is using evaluations as a basis for learning. It also has various tools and platforms for knowledge sharing. However, more effort is needed to overcome fragmentation among European Union institutions and to influence policies.

Knowledge sharing mechanisms have multiplied, but there is limited evidence of how learning is used

The DAC’s 2012 review found that the EU’s evaluation findings were used in a limited manner and with minimal sharing of results. As a result, the EU has increased evaluation dissemination efforts by encouraging evaluation managers to ensure good knowledge translation (i.e. interpreting and distilling the outcomes of the evaluation in a way tailored to specific user audiences) and by increasing co-ordination between DG DEVCO and the European External Action Service (EEAS) to improve the uptake of evaluation results.

For each evaluation, the responsible evaluation manager now systematically prepares a plan for communication and follow-up. Key operational staff are closely involved with the process, based on the logic that they are best placed to ensure that recommendations can be implemented. The communication plan covers the audience (key users and stakeholders); channels (e.g. e-mails, various platforms, social media and seminars); and reporting formats (e.g. summary, management brief, video). To promote awareness of the conclusions, public seminars are also held systematically in Brussels, with representatives of the European Parliament and member states (through the Council) always invited, and in partner countries.

Aside from learning from evaluations, DG DEVCO adopted its first Learning and Knowledge Development Strategy for 2014-20 and an action plan in 2014. Topics covered include alignment with the SDGs, organisational processes and IT applications, which are offered through online or group courses tailored to particular target audiences. The strategy and the plan also introduce a number of platforms such as the Evaluation Network Group, Learn4dev, Cap4dev, DEVCO Academy, European Expert Network on International Cooperation and Development and the Network on Knowledge Management Correspondence.

Staff in the field who are dealing with day-to-day operational challenges may not necessarily use these platforms, however. For example, staff in the field preferred and appreciated the seminar-based learning opportunities on specific topics in Brussels to share relevant knowledge among delegations. In this respect, the Commission is encouraged to gather feedback from the field on the most time-efficient and effective ways of learning and knowledge sharing.

More effort is needed to influence policies, overcome fragmentation of learning and communicate to the public

Directorates and institutions apart from DG DEVCO have their own systems for sharing knowledge and lessons learned, although with different levels of comprehensiveness and visibility. DG ECHO has an active system for knowledge management with a dedicated partners’ website that provides links to various training sessions and distance learning tools for non-governmental organisations (NGOs).6 Its Civil Protection Mechanism also offers a comprehensive training programme and an exchange platform for European experts to learn about the different national systems for emergency intervention and civil protection as well as how to improve co-ordination and assessment in disaster response.

Other directorates such as DG NEAR disseminate reports on monitoring and evaluation for internal learning by organising results seminars and thematic discussions. The European Investment Bank shares lessons learned from evaluations and offers staff and researchers training, online courses, podcasts and studies. However, both DG NEAR and the EIB have yet to develop a clear strategy or framework for knowledge sharing and institutional learning in development co-operation.

DG DEVCO in particular has made an effort to promote knowledge management and learning, with lessons used to inform policies and programmes at the field level. But there may be more emphasis on learning about operational procedures than about factors that contribute to outcomes and impact. In addition, it is not evident how lessons from projects and evaluations at decentralised levels are systematically aggregated to inform staff across the organisation and impart common successes and challenges to policy makers. Finally, learning is still fragmented across different directorates and lacks an institutional, whole-of-EU approach. Improvements could thus be made in analysing impact, influencing policies and consolidating learning across EU actors.

Communication to tailor messages from the results framework and evaluations to policy makers and the public could also be enhanced, as already noted in the 2012 peer review. In particular, while evaluations of DG DEVCO are uploaded on the EVAL Module IT repository system for internal use, they are not yet accessible to the public. A more open knowledge management culture that involves communicating to the public could be considered. Overall, in order to enhance coherence, communication, accountability and transparency, the EU could consider how it might communicate its development co-operation as a whole, including through the efforts of EIB and other relevant parts of the EU’s development co-operation system.

Bibliography

Government sources

European Commission (2018a), “2017 annual report on the implementation of the European Union's instruments for financing external actions in 2016”, Commission Staff Working Document, No. SWD(2018) 64 final, European Commission, https://ec.europa.eu/europeaid/sites/devco/files/2017-swd-annual-report_en.pdf.

European Commission (2018b), International Cooperation and Development, Measuring results (website), https://ec.europa.eu/europeaid/devcos-results-framework_en (accessed 23 July 2018).

European Commission (2018c), Budget Support: Trends and Results 2017, European Union, https://ec.europa.eu/europeaid/sites/devco/files/budget-support-trends-results-2017_en.pdf.

European Commission (2018d), Capacity4development (website), European Commission, https://europa.eu/capacity4dev/ (accessed 23 July 2018).

European Commission (2017a), Budget focused on results (website), http://ec.europa.eu/budget/budget4results/index_en.cfm (accessed 23 July 2018).

European Commission (2017b), International Cooperation and Development, Using our experience to improve the quality of our development engagement (website), https://ec.europa.eu/europeaid/using-our-experience-improve-quality-our-development-engagement_en (accessed 23 July 2018).

European Commission (2017c), EU International Cooperation and Development: First report on selected results, July 2013 - June 2014, European Union, https://ec.europa.eu/europeaid/sites/devco/files/eu-results-report_2013-2014_en.pdf.

European Commission (2017d), NEAR Strategic Evaluation Plan 2018-2022, European Commission, https://ec.europa.eu/neighbourhood-enlargement/sites/near/files/near_multinannual_strategic_evaluation_plan_2018-2022_01122017.pdf.

European Commission (2017e), ECHO, Accountability, annual reports (website), http://ec.europa.eu/echo/who/accountability/annual-reports_en (accessed 23 July 2018).

European Commission (2016a), European Neighbourhood Policy and Enlargement Negotiations, Monitoring and evaluation (website), https://ec.europa.eu/neighbourhood-enlargement/tenders/monitoring-and-evaluation_en (accessed 23 July 2018).

European Commission (2016b), “Proposal for a new European consensus on development - ‘Our World, Our Dignity, Our Future’”, COM(2016) 740 final, https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=COM:2016:740:FIN.

European Commission (2015a), “Better regulation guidelines”, Commission Staff Working Document (2015) 111 final, European Commission, http://ec.europa.eu/smart-regulation/guidelines/docs/swd_br_guidelines_en.pdf.

European Commission (2015b), “Launching the EU International Cooperation and Development Results Framework”, Commission Staff Working Document, No. SWD(2015) 80 final, European Commission, https://ec.europa.eu/europeaid/sites/devco/files/swd-2015-80-f1-staff-working-paper-v3-p1-805238_en_0.pdf.

European Commission (2015c), “Towards the World Humanitarian Summit: A global partnership for principled and effective humanitarian action”, Commission Staff Working Document, No. COM(2015) 419 final, European Commission, https://reliefweb.int/sites/reliefweb.int/files/resources/COM-2015-419-FIN-EN-TXT.pdf.

European Commission (2014), Learning and Knowledge Development Strategy (LKDS) 2014-2020, European Commission, https://europa.eu/capacity4dev/km-ks/blog/learning-and-knowledge-development-strategy-europeaid.

European Commission (2013), “Strengthening the foundations of Smart Regulation - improving evaluation”, COM(2013) 686 final, European Commission, http://ec.europa.eu/smart-regulation/docs/com_2013_686_en.pdf.

European Commission (2011), “Increasing the impact of EU development policy: An agenda for change”, COM(2011) 637 final, https://ec.europa.eu/europeaid/sites/devco/files/publication-agenda-for-change-2011_en.pdf.

European External Action Service (2014), Evaluation Matters - The Evaluation Policy for European Union Development Cooperation, European Commission, https://ec.europa.eu/europeaid/sites/devco/files/evaluation-matters_en_0.pdf.

European Investment Bank (2018a), Annual Report 2017 on EIB Activity in Africa, the Caribbean and the Pacific, and the Overseas Countries and Territories, European Investment Bank, Kirchberg, Luxembourg, http://www.eib.org/attachments/country/if_annual_report_2017_en.pdf.

European Investment Bank (2018b), Operations evaluation - thorough assessments to improve EIB performance (website), European Investment Bank, Kirchberg, Luxembourg, http://www.eib.org/en/projects/evaluation/index.htm (accessed 23 July 2018).

European Investment Bank (2018), The EIB outside the European Union in 2017, European Investment Bank, Kirchberg, Luxembourg, http://www.eib.org/attachments/country/eib_rem_annual_report_2017_en.pdf.

European Investment Bank (2017), The Results Measurement (ReM) Framework Methodology, European Investment Bank, Kirchberg, Luxembourg, http://www.eib.org/attachments/rem_framework_methodology_en.pdf.

European Investment Bank (2015), Accountable for the past, learning for the future: Evaluation at the EIB, European Investment Bank, Luxembourg, http://www.eib.org/attachments/ev/operations_evaluation_en.pdf.

UN-European Commission (2015), Operational conclusions of the 11th annual meeting of the Working Group (Financial and Administrative Framework), United Nations-European Commission, https://ec.europa.eu/europeaid/sites/devco/files/op-conclusions-11th-meeting-wg-fafa_en.pdf.

Other sources

OECD (2017), Strengthening the results chain: Synthesis of case studies of results-based management by providers, Discussion Paper, https://www.oecd.org/dac/peer-reviews/results-strengthening-results-chain-discussion-paper.pdf.

PARIS21 (2017), PRESS 2017: Partner Report on Support to Statistics, PARIS21 Secretariat, Paris, http://www.paris21.org/sites/default/files/2017-10/PRESS2017_web2.pdf.

Notes

1. Implementing partners now have contractual requirements to monitor and report on these results based on the framework.

2. The EU publishes several reports on results management each year: the Annual Report on the Implementation of the EU’s Instrument for Financing External Actions, which since 2017 has incorporated results based on EURF; a joint annual report on budget support by DG DEVCO and DG NEAR to provide an overview of budget support operations; the Annual Report on the European Union's humanitarian aid policies and implementation by DG ECHO; the respective Annual Activity Reports of DG DEVCO, DG NEAR and DG ECHO, which detail corporate achievements, management and the financial and human resource performance; the Annual Report on results of EIB operations outside the EU based on the Bank’s ReM framework; and the External Assistance Management Reports (EAMRs) and their Key Performance Indicators (KPIs), produced annually by each EU Delegation, which provide a snapshot of the situation of EU development co-operation projects.

3. The Better Regulation agenda is described at http://europa.eu/rapid/press-release_IP-15-4988_en.htm.

4. See https://ec.europa.eu/europeaid/project-and-programme-evaluations_en.

5. See https://www.ecgnet.org/about-ecg.

6. Distance training programmes are described at http://dgecho-partners-helpdesk.eu/dl/start.