1. The technical landscape

This chapter characterises the “artificial intelligence (AI) technical landscape”, which has evolved significantly since 1950, when Alan Turing first posed the question of whether machines can think. Since 2011, breakthroughs have taken place in the subset of AI called “machine learning”, in which machines leverage statistical approaches to learn from historical data and make predictions in new situations. The maturity of machine-learning techniques, along with large datasets and increasing computational power, is behind the current expansion of AI. This chapter also provides a high-level understanding of an AI system, which predicts, recommends or decides an outcome to influence the environment. In addition, it details a typical AI system lifecycle from i) design, data and models, including planning and design, data collection and processing, and model building and interpretation; ii) verification and validation; iii) deployment; to iv) operation and monitoring. Lastly, this chapter proposes a research taxonomy to support policy makers.

A short history of artificial intelligence

In 1950, British mathematician Alan Turing published a paper on computing machinery and intelligence (Turing, 1950[1]) posing the question of whether machines can think. He developed a simple heuristic to test his hypothesis: could a computer have a conversation and answer questions in a way that would trick a suspicious human into thinking the computer was actually a human?1 The resulting “Turing test” is still used today. That same year, Claude Shannon proposed the creation of a machine that could be taught to play chess (Shannon, 1950[2]). The machine could be trained by using brute force or by evaluating a small set of an opponent’s strategic moves (UW, 2006[3]).

Many consider the Dartmouth Summer Research Project of 1956 to be the birthplace of artificial intelligence (AI). At this workshop, the principle of AI was conceptualised by John McCarthy, Allen Newell, Arthur Samuel, Herbert Simon and Marvin Minsky. While AI research has steadily progressed over the past 60 years, the promises of early AI promoters proved to be overly optimistic. This led to an “AI winter” of reduced funding and interest in AI research during the 1970s.

New funding and interest in AI appeared with the advances in computational power that became available in the 1990s (UW, 2006[3]). Figure 1.1 provides a timeline of AI’s early development.

The AI winter ended in the 1990s as computational power and data storage advanced to the point that complex tasks became feasible. In 1995, AI took a major step forward with Richard Wallace’s development of the Artificial Linguistic Internet Computer Entity (A.L.I.C.E.), a program that could hold basic conversations. Also in the 1990s, IBM developed a computer named Deep Blue that used a brute-force approach to play against world chess champion Garry Kasparov. Deep Blue would look ahead six steps or more and could calculate 330 million positions per second (Somers, 2013[5]). In 1996, Deep Blue lost to Kasparov, but it won the rematch a year later.

In 2015, Alphabet’s DeepMind launched software to play the ancient game of Go against the best players in the world. It used an artificial neural network that was trained on thousands of human amateur and professional games to learn how to play. In 2016, AlphaGo beat the world’s best player at the time, Lee Sedol, four games to one. AlphaGo’s developers then let the program play against itself using trial and error, starting from completely random play with a few simple guiding rules. The result was a program (AlphaGo Zero) that trained itself faster and was able to beat the original AlphaGo by 100 games to 0. Learning entirely from self-play, with no human intervention and no historical data, AlphaGo Zero surpassed all other versions of AlphaGo in 40 days (Silver et al., 2017[6]) (Figure 1.2).

Where we are today

Over the past few years, three developments have dramatically increased the power, availability, growth and impact of AI: the availability of big data; cloud computing, with its associated computational and storage capacity; and breakthroughs in an AI technology called “machine learning” (ML).

Continuing technological progress is also leading to better and cheaper sensors, which capture more-reliable data for use by AI systems. The amount of data available for AI systems continues to grow as these sensors become smaller and less expensive to deploy. The result is significant progress in many core AI research areas such as:

- natural language processing
- autonomous vehicles and robotics
- computer vision
- language learning.

Some of the most interesting AI developments are outside of computer science in fields such as health, medicine, biology and finance. In many ways, the AI transition resembles the way computers diffused from a few specialised businesses to the broader economy and society in the 1990s. It also recalls how Internet access expanded beyond multinational firms to a majority of the population in many countries in the 2000s. Economies will increasingly need sector “bilinguals”. These are people specialised in one area such as economics, biology or law, but also skilled at AI techniques such as ML. The present chapter focuses on applications that are in use or foreseeable in the short and medium term rather than possible longer-term developments such as artificial general intelligence (AGI) (Box 1.1).

Artificial narrow intelligence (ANI), or “applied” AI, is designed to accomplish a specific problem-solving or reasoning task. This is the current state of the art. The most advanced AI systems available today, such as Google’s AlphaGo, are still “narrow”. To some extent, they can generalise pattern recognition, for example by transferring knowledge learned in image recognition to speech recognition. However, the human mind is far more versatile.

Applied AI is often contrasted with a (hypothetical) AGI. In AGI, autonomous machines would become capable of general intelligent action. Like humans, they would generalise and abstract learning across different cognitive functions. AGI would have a strong associative memory and be capable of judgment and decision making. It could solve multifaceted problems, learn through reading or experience, create concepts, perceive the world and itself, invent and be creative, react to the unexpected in complex environments and anticipate. With respect to a potential AGI, views vary widely. Experts caution that discussions should be realistic in terms of time scales. They broadly agree that ANI will generate significant new opportunities, risks and challenges. They also agree that the possible advent of an AGI, perhaps sometime during the 21st century, would greatly amplify these consequences.

Source: OECD (2017[7]), OECD Digital Economy Outlook 2017, https://doi.org/10.1787/9789264276284-en.

What is AI?

There is no universally accepted definition of AI. In November 2018, the AI Group of Experts at the OECD (AIGO) set up a subgroup to develop a description of an AI system. The description aims to be understandable, technically accurate, technology-neutral and applicable to short- and long-term time horizons. It is broad enough to encompass many of the definitions of AI commonly used by the scientific, business and policy communities. It also informed the development of the OECD Recommendation of the Council on Artificial Intelligence (OECD, 2019[8]).

Conceptual view of an AI system

The present description of an AI system is based on the conceptual view of AI detailed in Artificial Intelligence: A Modern Approach (Russell and Norvig, 2009[9]). This view is consistent with a widely used definition of AI as “the study of the computations that make it possible to perceive, reason, and act” (Winston, 1992[10]) and with similar general definitions (Bringsjord and Govindarajulu, 2018[11]).

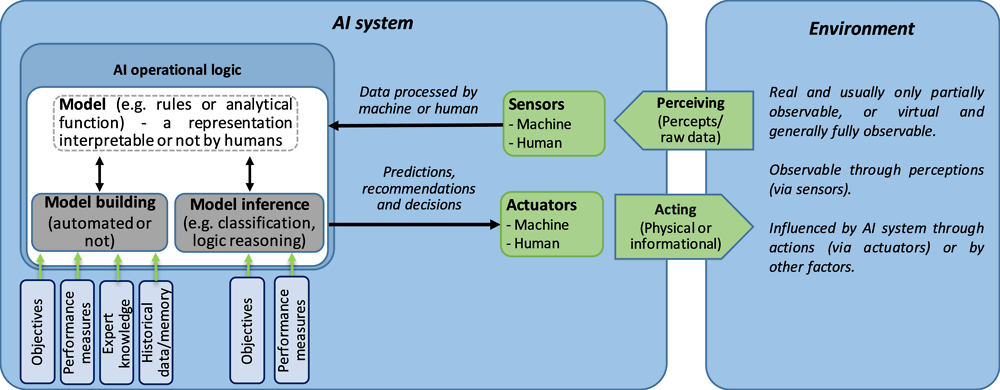

A conceptual view of AI is first presented as the high-level structure of a generic AI system (also referred to as an “intelligent agent”) (Figure 1.3). An AI system consists of three main elements: sensors, operational logic and actuators. Sensors collect raw data from the environment, while actuators act to change the state of the environment. The key power of an AI system resides in its operational logic. For a given set of objectives and based on input data from sensors, the operational logic provides output for the actuators. This output takes the form of recommendations, predictions or decisions that can influence the state of the environment.
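A toy example can make the sensor, operational logic and actuator structure concrete. The sketch below is a minimal illustration built on a hypothetical thermostat-like agent whose environment is a single room temperature. The function names and the 0.5-degree tolerance are illustrative choices and are not part of the OECD description.

```python
from dataclasses import dataclass


@dataclass
class Environment:
    temperature: float  # the state the agent perceives and acts on


def sensor(env: Environment) -> float:
    """Collect raw data (here, a single temperature reading) from the environment."""
    return env.temperature


def operational_logic(reading: float, target: float) -> str:
    """Map a perception and a human-defined objective (the target) to a decision."""
    if reading < target - 0.5:
        return "heat"
    if reading > target + 0.5:
        return "cool"
    return "idle"


def actuator(env: Environment, decision: str) -> None:
    """Act on the environment, changing its state by one degree per step."""
    if decision == "heat":
        env.temperature += 1.0
    elif decision == "cool":
        env.temperature -= 1.0


def run(env: Environment, target: float, steps: int) -> list[str]:
    """Repeat the perceive -> decide -> act loop and record each decision."""
    decisions = []
    for _ in range(steps):
        decision = operational_logic(sensor(env), target)
        actuator(env, decision)
        decisions.append(decision)
    return decisions
```

Starting from `Environment(temperature=18.0)` with a target of 21 degrees, five iterations produce three "heat" decisions followed by two "idle" decisions, which leaves the room at 21 degrees.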

A more detailed structure captures the main elements relevant to the policy dimensions of AI systems (Figure 1.4). To cover different types of AI systems and different scenarios, the diagram separates the model building process (such as ML) from the model itself. Model building is also separate from the model interpretation process, which uses the model to make predictions, recommendations and decisions; actuators use these outputs to influence the environment.

Environment

An environment in relation to an AI system is a space observable through perceptions (via sensors) and influenced through actions (via actuators). Sensors and actuators are either machines or humans. Environments are either real (e.g. physical, social, mental) and usually only partially observable, or else virtual (e.g. board games) and generally fully observable.

AI system

An AI system is a machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations or decisions influencing real or virtual environments. It does so by using machine and/or human-based inputs to: i) perceive real and/or virtual environments; ii) abstract such perceptions into models through analysis in an automated manner (e.g. with ML, or manually); and iii) use model inference to formulate options for information or action. AI systems are designed to operate with varying levels of autonomy.

AI model, model building and model interpretation

The core of an AI system is the AI model, a representation of all or part of the system’s external environment that describes the environment’s structure and/or dynamics. A model can be built from expert knowledge and/or data, by humans and/or by automated tools (e.g. ML algorithms). Objectives (e.g. output variables) and performance measures (e.g. accuracy, resources for training, representativeness of the dataset) guide the building process. Model inference is the process by which humans and/or automated tools derive an outcome from the model. These outcomes take the form of recommendations, predictions or decisions. Objectives and performance measures guide the execution. In some cases (e.g. deterministic rules), a model can offer a single recommendation. In other cases (e.g. probabilistic models), a model can offer a variety of recommendations. Each of these is associated with different values of performance measures such as level of confidence, robustness or risk. In some cases, during the interpretation process, it is possible to explain why specific recommendations are made. In other cases, explanation is almost impossible.
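The sketch below illustrates two distinctions at once: model building versus model inference, and a single deterministic recommendation versus several probabilistic ones. It is a minimal illustration with assumed inputs. The weather history, the frequency-count model and the umbrella rule are all hypothetical.

```python
from collections import Counter

# Hypothetical historical observations: (observed condition, later outcome).
HISTORY = [("cloudy", "rain"), ("cloudy", "rain"), ("cloudy", "dry"), ("clear", "dry")]


def build_model(history):
    """Model building: count how often each condition was followed by each outcome."""
    model = {}
    for condition, outcome in history:
        model.setdefault(condition, Counter())[outcome] += 1
    return model


def infer_probabilistic(model, condition):
    """Probabilistic inference: every possible outcome, each with a confidence level."""
    counts = model[condition]
    total = sum(counts.values())
    return {outcome: n / total for outcome, n in counts.items()}


def infer_rule(condition):
    """Deterministic inference: an expert-written rule yields a single recommendation."""
    return "take an umbrella" if condition == "cloudy" else "no umbrella needed"
```

Under these assumptions, `infer_probabilistic(build_model(HISTORY), "cloudy")` gives rain a confidence of about 0.67 and dry weather about 0.33. `infer_rule("cloudy")` returns only one answer.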

AI system illustrations

Credit-scoring system

A credit-scoring system illustrates a machine-based system that influences its environment (whether people are granted a loan). It makes recommendations (a credit score) for a given set of objectives (credit-worthiness). It does so by using both machine-based inputs (historical data on people’s profiles and on whether they repaid loans) and human-based inputs (a set of rules). With these two sets of inputs, the system perceives real environments (whether people are repaying loans on an ongoing basis). It abstracts such perceptions into models automatically. A credit-scoring algorithm could, for example, use a statistical model. Finally, it uses model inference (the credit-scoring algorithm) to formulate a recommendation (a credit score) of options for outcomes (providing or denying a loan).
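The credit-scoring illustration can be sketched in a few lines. The setup below is hypothetical and deliberately simplified. It assumes two made-up applicant features (debt-to-income ratio and number of missed payments), a tiny invented history, logistic regression as the statistical model and an illustrative lender rule. Real credit-scoring systems use far richer data.

```python
import math

# Hypothetical history: ((debt-to-income ratio, missed payments), repaid: 1 or 0).
HISTORY = [
    ((0.1, 0), 1), ((0.2, 0), 1), ((0.3, 1), 1), ((0.4, 0), 1),
    ((0.6, 2), 0), ((0.7, 1), 0), ((0.8, 3), 0), ((0.9, 2), 0),
]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(data, lr=0.5, epochs=2000):
    """Machine-based input: fit logistic-regression weights by gradient descent on log-loss."""
    w, b, n = [0.0, 0.0], 0.0, len(data)
    for _ in range(epochs):
        gw, gb = [0.0, 0.0], 0.0
        for (x1, x2), y in data:
            err = sigmoid(w[0] * x1 + w[1] * x2 + b) - y
            gw[0] += err * x1
            gw[1] += err * x2
            gb += err
        w = [w[0] - lr * gw[0] / n, w[1] - lr * gw[1] / n]
        b -= lr * gb / n
    return w, b


def credit_score(model, applicant):
    """Model inference: estimated probability of repayment, scaled to 0-100."""
    w, b = model
    x1, x2 = applicant
    return round(100 * sigmoid(w[0] * x1 + w[1] * x2 + b))


def recommend(model, applicant, min_score=50, max_missed=3):
    """Combine the learned score with a human-defined (hypothetical) lender rule."""
    if applicant[1] >= max_missed:
        return "deny"
    return "grant" if credit_score(model, applicant) >= min_score else "deny"
```

In this design, the learned model supplies the score and the human rule can override it. This mirrors the text's combination of machine-based and human-based inputs.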

Assistant for the visually impaired

An assistant for visually impaired people illustrates how a machine-based system influences its environment. It makes recommendations (e.g. how a visually impaired person can avoid an obstacle or cross the street) for a given set of objectives (travel from one place to another). It does so using machine and/or human-based inputs (large tagged image databases of objects, written words and even human faces) for three ends. First, it perceives images of the environment (a camera captures an image of what is in front of a person and sends it to an application). Second, it abstracts such perceptions into models automatically (object recognition algorithms that can recognise a traffic light, a car or an obstacle on the sidewalk). Third, it uses model inference to recommend options for outcomes (providing an audio description of the objects detected in the environment) so the person can decide how to act and thereby influence the environment.
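The assistant's final step turns recognised objects into a description that can be read aloud. The sketch below illustrates that step under several assumptions. An upstream object-recognition model is presumed to return a label, a confidence score, a bearing and an estimated distance for each object. Text-to-speech is left out of scope, and the 0.6 confidence cut-off is an arbitrary choice.

```python
from typing import NamedTuple


class Detection(NamedTuple):
    label: str          # e.g. "traffic light", from a hypothetical recogniser
    confidence: float   # recogniser's confidence, between 0 and 1
    bearing: str        # "left", "ahead" or "right"
    distance_m: float   # estimated distance in metres


def describe(detections, min_confidence=0.6):
    """Turn model outputs into a short description, nearest objects first."""
    kept = sorted(
        (d for d in detections if d.confidence >= min_confidence),
        key=lambda d: d.distance_m,
    )
    if not kept:
        return "No obstacles detected."
    parts = [f"{d.label} {d.bearing}, {d.distance_m:.0f} metres" for d in kept]
    return "; ".join(parts) + "."
```

Consider a confident detection of a car 8 metres to the left, an obstacle 2 metres ahead and a low-confidence dog. The function returns "obstacle ahead, 2 metres; car left, 8 metres.", and the person decides how to act.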

AlphaGo Zero

AlphaGo Zero is an AI system that plays the board game Go better than any professional human Go player. The board game’s environment is virtual and fully observable. Game positions are constrained by the objectives and the rules of the game. AlphaGo Zero is a system that uses both human-based inputs (the rules of Go) and machine-based inputs (learning based on playing iteratively against itself, starting from completely random play). It abstracts the data into a (stochastic) model of actions (“moves” in the game) trained via so-called reinforcement learning. Finally, it uses the model to propose a new move based on the state of play.

Autonomous driving system

Autonomous driving systems illustrate a machine-based system that can influence its environment (whether a car accelerates, decelerates or turns). Such a system makes predictions (whether an object or a sign is an obstacle or an instruction) and/or makes decisions (accelerating, braking, etc.) for a given set of objectives (going from point A to B safely in the least time possible). It does so by using both machine-based inputs (historical driving data) and human-based inputs (a set of driving rules). These inputs are used to create a model of the car and its environment, which allows the system to do three things. First, it can perceive real environments (through sensors such as cameras and sonars). Second, it can abstract such perceptions into models automatically (including object recognition; speed and trajectory detection; and location-based data). Third, it can use model inference. For example, the self-driving algorithm can run numerous simulations of possible short-term futures for the vehicle and its environment. In this way, it can recommend options for outcomes (to stop or go).
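One way to choose between stopping and going is to simulate many possible short-term futures, which a Monte Carlo sketch can illustrate. Everything here is a hypothetical simplification. There is a single pedestrian whose walking speed is uncertain. If the car keeps going, it reaches the crossing in 2 seconds. Arrivals within a 1-second window count as a conflict, and stopping is assumed to be always safe.

```python
import random


def conflict_if_go(pedestrian_gap_m, rng, car_time_s=2.0, window_s=1.0):
    """Simulate one possible future in which the car keeps going."""
    # Hypothetical uncertainty: the pedestrian's walking speed differs per future.
    ped_speed = rng.uniform(0.5, 2.0)               # metres per second
    ped_time_s = pedestrian_gap_m / ped_speed       # time to reach the car's lane
    # Count a conflict if car and pedestrian arrive within the safety window.
    return abs(ped_time_s - car_time_s) < window_s


def recommend(pedestrian_gap_m, n_futures=10_000, max_risk=0.01, seed=0):
    """Estimate the risk of going from many simulated futures; stopping is assumed safe."""
    rng = random.Random(seed)
    conflicts = sum(conflict_if_go(pedestrian_gap_m, rng) for _ in range(n_futures))
    risk = conflicts / n_futures
    return ("go" if risk <= max_risk else "stop"), risk
```

With the pedestrian 10 metres away, no simulated future produces a conflict and the sketch recommends "go". At 1 metre, roughly a third of the futures conflict, so it recommends "stop".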

The AI system lifecycle

In November 2018, AIGO established a subgroup to inform the OECD Recommendation of the Council on Artificial Intelligence (OECD, 2019[8]) by detailing the AI system lifecycle. This lifecycle framework does not represent a new standard for the AI lifecycle2 or propose prescriptive actions. However, it can help contextualise other international initiatives on AI principles.3

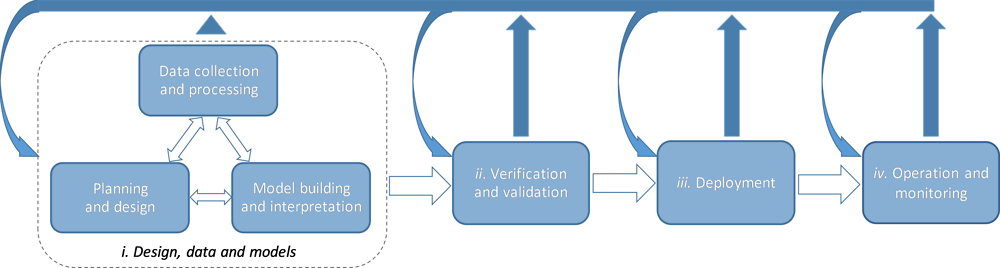

An AI system incorporates many phases of traditional software development lifecycles, and of system development lifecycles more generally. However, the AI system lifecycle typically involves four specific phases. The design, data and models phase is a context-dependent sequence encompassing planning and design, data collection and processing, and model building and interpretation. This is followed by verification and validation, deployment, and operation and monitoring (Figure 1.5). These phases often take place iteratively and are not necessarily sequential. The decision to retire an AI system from operation may occur at any point during the operation and monitoring phase.

The AI system lifecycle phases can be described as follows:

1. Design, data and models includes several activities, whose order may vary for different AI systems:
   - Planning and design of the AI system involves articulating the system’s concept and objectives, underlying assumptions, context and requirements, and potentially building a prototype.
   - Data collection and processing includes gathering and cleaning data, performing checks for completeness and quality, and documenting the metadata and characteristics of the dataset. Dataset metadata include information on how a dataset was created, its composition, its intended uses and how it has been maintained over time.
   - Model building and interpretation involves the creation or selection of models or algorithms, their calibration and/or training, and their interpretation.

2. Verification and validation involves executing and tuning models, with tests to assess performance across various dimensions and considerations.

3. Deployment into live production involves piloting, checking compatibility with legacy systems, ensuring regulatory compliance, managing organisational change and evaluating user experience.

4. Operation and monitoring of an AI system involves operating the AI system and continuously assessing its recommendations and impacts (both intended and unintended) in light of objectives and ethical considerations. This phase identifies problems and adjusts by reverting to other phases or, if necessary, retiring an AI system from production.
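A small state machine is one way to make the iterative, non-sequential character of the lifecycle explicit. The sketch below is only an illustration, since the lifecycle framework is not prescriptive. The particular set of allowed transitions is an assumption, for example letting verification send a system back to design, data and models. Only the option to retire a system from the operation and monitoring phase comes directly from the text.

```python
from enum import Enum


class Phase(Enum):
    DESIGN_DATA_MODELS = "design, data and models"
    VERIFICATION_VALIDATION = "verification and validation"
    DEPLOYMENT = "deployment"
    OPERATION_MONITORING = "operation and monitoring"
    RETIRED = "retired"


# Assumed transitions: forward through the phases, plus iterations back to
# earlier phases; retirement is reachable only from operation and monitoring.
TRANSITIONS = {
    Phase.DESIGN_DATA_MODELS: {Phase.VERIFICATION_VALIDATION},
    Phase.VERIFICATION_VALIDATION: {Phase.DESIGN_DATA_MODELS, Phase.DEPLOYMENT},
    Phase.DEPLOYMENT: {Phase.VERIFICATION_VALIDATION, Phase.OPERATION_MONITORING},
    Phase.OPERATION_MONITORING: {
        Phase.DESIGN_DATA_MODELS,
        Phase.VERIFICATION_VALIDATION,
        Phase.DEPLOYMENT,
        Phase.RETIRED,
    },
    Phase.RETIRED: set(),
}


def advance(current: Phase, nxt: Phase) -> Phase:
    """Move to the next phase, rejecting transitions the model does not allow."""
    if nxt not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {nxt.value}")
    return nxt
```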

The centrality of data and of models that rely on data for their training and evaluation distinguishes the lifecycle of many AI systems from that of more general system development. Some AI systems based on ML can iterate and evolve over time.

AI research

This section reviews some technical developments with regard to AI research in academia and the private sector that are enabling the AI transition. AI, and particularly its subset called ML, is an active research area in computer science today. A broader range of academic disciplines is leveraging AI techniques for a wide variety of applications.

There is no agreed-upon classification scheme for breaking AI into research streams that is comparable, for example, to the 20 major economics research categories in the Journal of Economic Literature’s classification system. This section aims to develop an AI research taxonomy for policy makers to understand some recent AI trends and identify policy issues.

Research has historically distinguished symbolic AI from statistical AI. Symbolic AI uses logical representations to deduce a conclusion from a set of constraints. It requires that researchers build detailed and human-understandable decision structures to translate real-world complexity and help machines arrive at human-like decisions. Symbolic AI is still in widespread use, e.g. for optimisation and planning tools. Statistical AI, whereby machines induce a trend from a set of patterns, has seen increasing uptake recently. A number of applications combine symbolic and statistical approaches. For example, natural language processing (NLP) algorithms often combine statistical approaches (that build on large amounts of data) and symbolic approaches (that consider issues such as grammar rules). Combining models built on both data and human expertise is viewed as promising to help address the limitations of both approaches.
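Deducing a conclusion from human-written rules and inducing a pattern from data can be compared side by side. In the minimal sketch below, the animal rules and the labelled examples are hypothetical. The statistical part uses a brute-force search for a single threshold, which is among the simplest inductive methods.

```python
# Symbolic AI: human-written if-then rules, applied by forward chaining.
RULES = [
    ({"has_feathers", "lays_eggs"}, "bird"),
    ({"bird", "cannot_fly", "swims"}, "penguin"),
]


def deduce(facts):
    """Repeatedly apply rules whose premises hold until no new conclusion appears."""
    facts = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in RULES:
            if premises <= facts and conclusion not in facts:
                facts.add(conclusion)
                changed = True
    return facts


# Statistical AI: induce a decision threshold from labelled examples.
def induce_threshold(examples):
    """Pick the cut-off on one numeric feature that misclassifies the fewest examples."""
    def errors(t):
        return sum((x >= t) != label for x, label in examples)
    return min(sorted(x for x, _ in examples), key=errors)
```

The symbolic part is fully human-readable but only knows what its authors wrote down. The statistical part finds its rule, here "x >= 3.0" for the examples in the test, entirely from data.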

AI systems increasingly use ML. This is a set of techniques that allow machines to learn in an automated manner through patterns and inferences rather than through explicit instructions from a human. ML approaches often teach machines to reach an outcome by showing them many examples of correct outcomes. Alternatively, developers can define a set of rules and let the machine learn by trial and error. ML is usually used in building or adjusting a model, but can also be used to interpret a model’s results (Figure 1.6). ML contains numerous techniques that have been used by economists, researchers and technologists for decades. These range from linear and logistic regressions, decision trees and principal component analysis to deep neural networks.
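The perceptron, one of the oldest learning algorithms, shows how a machine can learn from examples of correct outcomes rather than from explicit instructions. It is used here as a teaching example and is not a technique the text singles out. The machine is never told the rule for the logical AND function. Instead, it adjusts its weights whenever it misclassifies one of four labelled examples.

```python
def predict(model, x):
    """Output 1 if the weighted sum of inputs plus bias is positive, else 0."""
    w, b = model
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(examples, epochs=20, lr=1.0):
    """Learn weights from labelled examples instead of hand-written rules."""
    w, b = [0.0] * len(examples[0][0]), 0.0
    for _ in range(epochs):
        for x, y in examples:                 # y is the correct outcome, 1 or 0
            update = lr * (y - predict((w, b), x))   # zero when the prediction is right
            w = [wi + update * xi for wi, xi in zip(w, x)]
            b += update
    return w, b


AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
```

After training on `AND_EXAMPLES`, the learned weights reproduce all four correct outcomes.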

In economics, regression models use input data to make predictions in such a way that researchers can interpret the coefficients (weights) on the input variables, often for policy reasons. With ML, people may not be able to understand the models themselves. Additionally, ML problems tend to work with many more variables than is common in economics. These variables, known as “features”, typically number in the thousands or higher. Larger data sets can range from tens of thousands to hundreds of millions of observations. At this scale, researchers rely on more sophisticated and less-understood techniques such as neural networks to make predictions. Interestingly, one core research area of ML is trying to reintroduce the type of explainability used by economists in these large-scale models (see Cluster 4 below).
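The interpretability that economists rely on is clearest in the simplest case, ordinary least squares with one input variable. There, the fitted slope reads directly as the expected change in the outcome per unit change in the input. The data in this sketch are invented for illustration. A neural network with thousands of features generally offers no comparably simple reading of individual weights.

```python
def ols(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x must vary for the slope to be identified")
    slope = sxy / sxx                      # change in y per one-unit change in x
    intercept = mean_y - slope * mean_x
    return intercept, slope
```

For the hypothetical data `xs = [1, 2, 3, 4]` and `ys = [5, 8, 11, 14]`, the fit returns an intercept of 2 and a slope of 3. Each additional unit of x is therefore associated with 3 more units of y.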

The main technology behind the current wave of ML applications is a sophisticated statistical modelling technique called “neural networks”. This technique is accompanied by growing computational power and the availability of massive datasets (“big data”). Neural networks involve repeatedly interconnecting thousands or millions of simple transformations into a larger statistical machine that can learn sophisticated relationships between inputs and outputs. In other words, during training, neural networks adjust their internal parameters (weights) to find and optimise links between inputs and outputs. Finally, deep learning is a phrase that refers to particularly large neural networks with many layers; there is no defined threshold as to when a neural net becomes “deep”.
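A tiny example can illustrate how a network learns relationships between inputs and outputs. The sketch below is a minimal two-layer neural network in plain Python. It uses the XOR function as a toy task because no single linear model can represent it. The network size, learning rate and number of epochs are arbitrary. Training does not rewrite any code. Backpropagation repeatedly nudges the numeric weights to reduce the prediction error.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init(n_hidden=4, seed=0):
    """Random initial weights for 2 inputs -> n_hidden sigmoid units -> 1 output."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(n_hidden)]
    w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    return w1, [0.0] * n_hidden, w2, 0.0


def forward(params, x):
    w1, b1, w2, b2 = params
    h = [sigmoid(w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(w1, b1)]
    return h, sigmoid(sum(v * hj for v, hj in zip(w2, h)) + b2)


def loss(params, data):
    """Mean squared error over the dataset."""
    return sum((forward(params, x)[1] - y) ** 2 for x, y in data) / len(data)


def train_step(params, data, lr=0.5):
    """One pass of per-example gradient descent using backpropagation."""
    w1, b1, w2, b2 = params
    for x, y in data:
        h, out = forward((w1, b1, w2, b2), x)
        d_out = 2 * (out - y) * out * (1 - out)        # error signal at the output
        d_h = [d_out * w2[j] * h[j] * (1 - h[j]) for j in range(len(h))]
        w2 = [w2[j] - lr * d_out * h[j] for j in range(len(h))]
        b2 -= lr * d_out
        w1 = [[w1[j][0] - lr * d_h[j] * x[0], w1[j][1] - lr * d_h[j] * x[1]]
              for j in range(len(h))]
        b1 = [b1[j] - lr * d_h[j] for j in range(len(h))]
    return w1, b1, w2, b2


XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
```

Calling `train_step` a few thousand times on `XOR` lowers the loss as the weights adapt. Production networks do the same with millions of weights and specialised libraries.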

This evolving dynamic in AI research is paired with continual advances in computational abilities, data availability and neural network design. Together, they mean the statistical approach to AI will likely continue as an important part of AI research in the short term. As a result, policy makers should focus their attention on AI developments that will likely have the largest impact over the coming years and represent some of the most difficult policy challenges. These challenges include unpacking the machines’ decisions and making the decision-making process more transparent. Policy makers should also keep in mind that the most dynamic AI approaches (statistical AI, specifically “neural networks”) are not relevant for all types of problems. Other AI approaches, and coupling symbolic and statistical methods, remain important.

There is no widely agreed-upon taxonomy for AI research or for its ML subset. The taxonomy proposed in the next subsection represents 25 AI research streams. They are organised into four broad categories and nine sub-categories, mainly focused on ML. In traditional economic research, researchers may focus on a narrow research area. By contrast, AI researchers commonly work across multiple clusters simultaneously to solve open research problems.

Cluster 1: ML applications

The first broad research category applies ML methods to solve various practical challenges in the economy and society. Examples of applied ML are emerging in much the same way as Internet connectivity transformed certain industries first and then swept across the entire economy. Chapter 3 provides a range of examples of AI applications emerging across OECD countries. The research streams in Table 1.1 represent the largest areas of research linked to real-world application development.

Core applied research areas that use ML include natural language processing, computer vision and robotic navigation. Each of these three research areas represents a rich and expanding research field. Research challenges can be confined to just one area or can span multiple streams. For example, researchers in the United States are combining NLP of free-text mammogram and pathology notes with computer vision of mammograms to aid with breast cancer screening (Yala et al., 2017[12]).

Two research lines focus on ways to contextualise ML. Algorithmic game theory lies at the intersection of economics, game theory and computer science. It uses algorithms to analyse and optimise multi-period games. Collaborative systems are an approach to large challenges where multiple ML systems combine to tackle different parts of complex problems.
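Algorithmic game theory studies how strategies perform when a game is played over many periods. The sketch below simulates a repeated prisoner's dilemma, a textbook example chosen for illustration rather than one named in the text. It uses the conventional payoffs: 5 for defecting against a cooperator, 3 each for mutual cooperation, 1 each for mutual defection and 0 for being exploited. Two classic strategies play against each other.

```python
# (my move, opponent's move) -> my payoff; "C" = cooperate, "D" = defect.
PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}


def tit_for_tat(opponent_history):
    """Cooperate first, then copy the opponent's previous move."""
    return "C" if not opponent_history or opponent_history[-1] == "C" else "D"


def always_defect(opponent_history):
    return "D"


def play(strategy_a, strategy_b, rounds=10):
    """Play a multi-period game and return each player's total payoff."""
    hist_a, hist_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        a, b = strategy_a(hist_b), strategy_b(hist_a)
        score_a += PAYOFF[(a, b)]
        score_b += PAYOFF[(b, a)]
        hist_a.append(a)
        hist_b.append(b)
    return score_a, score_b
```

Over 10 rounds, two tit-for-tat players each earn 30. Against a player that always defects, tit-for-tat earns 9 and its opponent 14. Two defectors earn only 10 each.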

Cluster 1: Policy relevance

Several relevant policy issues are linked to AI applications. These include the future of work, the potential impact of AI, and human capital and skills development. They also include understanding in which situations AI applications may or may not be appropriate in sensitive contexts. Other relevant issues include AI’s impact on industry players and dynamics, government open data policies, regulations for robotic navigation and privacy policies that govern the collection and use of data.

Cluster 2: ML techniques

The second broad category of research focuses on the techniques and paradigms used in ML. Similar to quantitative methods research in the social sciences, this line of research builds and supplies the technical tools and approaches used in machine-learning applications (Table 1.2).

Neural networks (of which “deep learning” is a subcategory) dominate this category and form the basis for most ML today. ML techniques also include various paradigms for helping the system learn. Reinforcement learning trains the system in a way that mimics the way humans learn via trial and error. The algorithms are not given explicit tasks, but rather learn by trying different options in rapid succession. Based on rewards or punishments as outcomes, they adapt accordingly. This has been referred to as relentless experimentation (Knight, 2017[13]).
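The simplest reinforcement-learning setting, a multi-armed bandit, illustrates learning from rewards by trial and error. In this hypothetical sketch, the system repeatedly chooses one of three options whose payout probabilities it does not know. An epsilon-greedy rule mostly exploits the option that has paid best so far and occasionally explores at random. Full reinforcement learning, as in AlphaGo Zero, adds states, sequences of moves and far larger models.

```python
import random


def epsilon_greedy(true_means, steps=5000, epsilon=0.1, seed=0):
    """Learn which option pays best purely from rewards, by trial and error."""
    rng = random.Random(seed)
    n = len(true_means)
    counts = [0] * n
    estimates = [0.0] * n
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n)                            # explore at random
        else:
            arm = max(range(n), key=lambda a: estimates[a])   # exploit best so far
        reward = 1.0 if rng.random() < true_means[arm] else 0.0   # reward or nothing
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]  # running average
    return estimates, counts
```

With hidden payout probabilities of 0.2, 0.5 and 0.8, the system ends up choosing the third option most of the time. It learns this solely from the rewards it receives.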

Generative models, including generative adversarial networks (GANs), train a system to produce new data similar to an existing dataset. GANs are an exciting area of AI research because they pit two neural networks, a generator and a discriminator, against each other in a zero-sum game, typically without labelled data. In game theory terms, they function and learn as a set of rapidly repeated games. By setting systems against each other at computationally high speeds, the systems can learn profitable strategies. A related approach, self-play, has proven particularly effective in structured environments with clear rules, such as the game of Go with AlphaGo Zero.
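The zero-sum game at the heart of a GAN is usually written as the minimax objective below, taken from the original GAN formulation. Here D is the discriminator, which outputs the probability that a sample is real. G is the generator, which maps random noise z to a synthetic sample. p_data is the distribution of the real data and p_z is the noise distribution. D tries to maximise V by telling real samples from generated ones, while G tries to minimise V by making its samples indistinguishable from real ones.

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_{z}}\left[\log\left(1 - D(G(z))\right)\right]
```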

Cluster 2: Policy relevance

Several public policy issues are relevant to the development and deployment of ML technologies. These issues include supporting better training data sets; funding for academic research and basic science; policies to create “bilinguals” who can combine AI skills with other competencies; and computing education. For example, research funding from the Canadian government supported breakthroughs that led to the extraordinary success of modern neural networks (Allen, 2015[14]).

Cluster 3: Ways of improving ML/optimisations

The third broad category of research focuses on ways to improve and optimise ML tools. It breaks down research streams based on the time horizon for results (current, emerging and future) (Table 1.3). Short-term research is focusing on speeding up the deep-learning process. It does this either via better data collection or by using distributed computer systems to train the algorithm.
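Data parallelism, one common approach (the text does not specify which), can illustrate training on distributed computer systems. In this minimal sketch, a hypothetical dataset is split into equal shards. Each "worker" computes the gradient on its own shard, and the averaged gradient is used for the update. With equal-sized shards this average equals the full-batch gradient, so the workers share the computation without changing the result. The workers here run one after another; a real system would place them on separate machines or accelerators.

```python
def gradient(w, batch):
    """Gradient of mean squared error for the one-parameter model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_gradient(w, data, n_workers):
    """Split data into equal shards, compute each shard's gradient, then average."""
    if len(data) % n_workers:
        raise ValueError("this sketch assumes equal-sized shards")
    size = len(data) // n_workers
    shards = [data[i * size:(i + 1) * size] for i in range(n_workers)]
    worker_grads = [gradient(w, shard) for shard in shards]  # one per worker
    return sum(worker_grads) / n_workers


def train(data, n_workers=4, lr=0.01, steps=200):
    """Plain gradient descent driven by the averaged worker gradients."""
    w = 0.0
    for _ in range(steps):
        w -= lr * data_parallel_gradient(w, data, n_workers)
    return w
```

On the invented data `[(x, 3.0 * x) for x in range(1, 9)]`, four workers together recover the slope of 3.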

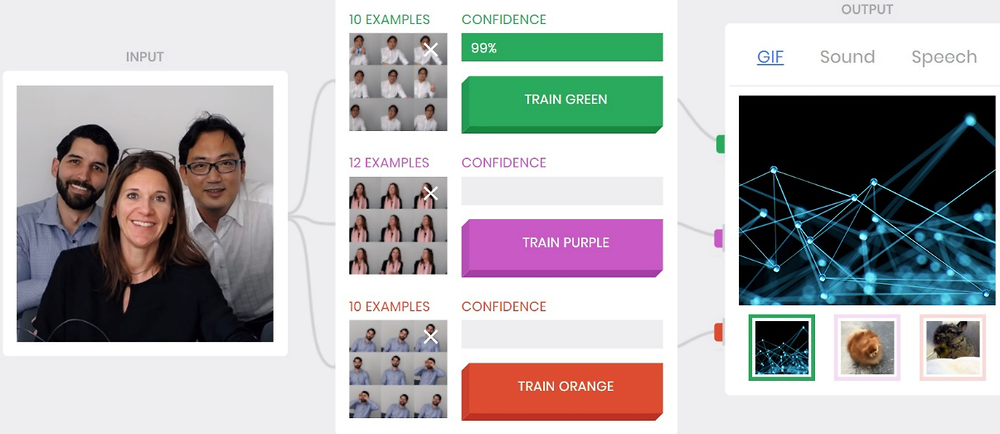

Researchers are also focused on enabling ML on low-power devices such as mobile phones and other connected devices. Significant progress has been made on this front. Projects such as Google’s Teachable Machine now offer open-source ML tools light enough to run in a browser (Box 1.2). Teachable Machine is just one example of emerging AI development tools meant to expand the reach and efficiency of ML. There are also significant advances in the development of dedicated AI chips for mobile devices.

ML research with a longer time horizon includes studying the mechanisms that allow neural networks to learn so effectively. Although neural networks have proven to be a powerful ML technique, understanding of how they operate is still limited. Understanding these processes would make it possible to engineer neural networks on a deeper level. Longer-term research is also looking at ways to train neural networks using much smaller sets of training data, sometimes referred to as “one-shot learning”. It is also generally trying to make the training process more efficient. Large models can take weeks or months to train and require hundreds of millions of training examples.
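Nearest-neighbour classification is one simple way to learn from a single example per class; research on one-shot learning spans many other techniques. The sketch below assumes that a hypothetical pre-trained network has already turned each image into a short feature vector. It then labels new inputs by their distance to the one stored example of each class.

```python
import math
from typing import Callable, Dict, Sequence


def one_shot_classifier(
    support: Dict[str, Sequence[float]],
) -> Callable[[Sequence[float]], str]:
    """Build a classifier from exactly one labelled example per class.

    `support` maps each label to the feature vector of its single example;
    the features are assumed to come from a hypothetical pre-trained network.
    """
    def classify(features: Sequence[float]) -> str:
        # Predict the label whose single example is closest in feature space.
        return min(support, key=lambda label: math.dist(features, support[label]))
    return classify
```

The sketch works only if the pre-trained features already separate the classes well. In practice, much of the difficulty of one-shot learning lies in obtaining such features.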

Teachable Machine is a Google experiment that allows people to train a machine to detect different scenarios using a camera built into a phone or computer. The user takes a series of pictures for three different scenarios (e.g. different facial expressions) to train the teachable machine. The machine then analyses the photos in the training data set and can use them to detect different scenarios. For example, the machine can play a sound every time the person smiles within the camera’s range. Teachable Machine stands out as an ML project because the neural network runs exclusively in the user’s browser without any need for outside computation or data storage (Figure 1.7).

Cluster 3: Policy relevance

The policy relevance of the third cluster includes the implications of running ML on stand-alone devices and thus of not necessarily sharing data on the cloud. It also includes the potential to reduce energy use, and the need to develop better AI tools to expand AI’s beneficial uses.

Cluster 4: Considering the societal context

The fourth broad research category examines the context for ML from technical, legal and social perspectives. Organisations increasingly rely on ML systems to make important decisions. Therefore, it is important to understand how bias can be introduced, how bias can propagate and how to eliminate bias from outcomes. One of the most active research areas in ML is concerned with the transparency and accountability of AI systems (Table 1.4). Statistical approaches to AI have made algorithmic decisions harder for humans to comprehend. Such decisions can have significant impacts on the lives of individuals, from bank loans to parole decisions (Angwin et al., 2016[15]). Another category of contextual ML research involves steps to ensure the safety and integrity of these systems. Researchers’ understanding of how neural networks arrive at decisions is still at an early stage. Neural networks can often be tricked using simple methods such as changing a few pixels in a picture (Ilyas et al., 2018[16]). Research in these streams seeks to defend systems against the inadvertent introduction of unintended information and against adversarial attacks. It also aims to verify the integrity of ML systems.
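The fast gradient sign method, a well-known white-box attack, can illustrate this fragility. It is swapped in here for simplicity, whereas the study cited in the text concerns black-box attacks that can only query the model. The sketch assumes a hypothetical linear classifier over four pixel intensities. Shifting every pixel by just 0.1 is enough to flip its decision.

```python
def score(w, b, x):
    """Linear classifier: class 1 if the score is positive, class 0 otherwise."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_linear(w, x, epsilon, true_label):
    """Fast gradient sign method against a linear model.

    For a linear score the gradient with respect to the input is simply w, so
    each feature moves by epsilon in the direction that hurts the true class.
    """
    direction = -1 if true_label == 1 else 1   # push the score toward the other class
    return [xi + direction * epsilon * sign(wi) for wi, xi in zip(w, x)]
```

With hypothetical weights `[0.5, -0.3, 0.8, 0.1]`, bias `-0.2` and input `[0.4, 0.5, 0.3, 0.6]`, the score falls from 0.15 (class 1) to about -0.02 (class 0), although no pixel changes by more than 0.1.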

Cluster 4: Policy relevance

Several relevant policy issues are linked to the context surrounding ML. These include requirements for algorithmic accountability, combating bias, the impact of ML systems, product safety, liability and security (OECD, 2019[8]).

References

[14] Allen, K. (2015), “How a Toronto professor’s research revolutionized artificial intelligence”, The Star, 17 April, https://www.thestar.com/news/world/2015/04/17/how-a-toronto-professors-research-revolutionized-artificial-intelligence.html.

[15] Angwin, J. et al. (2016), “Machine bias: There’s software used across the country to predict future criminals. And it’s biased against blacks”, ProPublica, https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing.

[4] Anyoha, R. (2017), “The history of artificial intelligence”, Harvard University Graduate School of Arts and Sciences blog, 28 August, http://sitn.hms.harvard.edu/flash/2017/history-artificial-intelligence/.

[11] Bringsjord, S. and N. Govindarajulu (2018), “Artificial intelligence”, The Stanford Encyclopedia of Philosophy Archive, https://plato.stanford.edu/archives/fall2018/entries/artificial-intelligence/.

[16] Ilyas, A. et al. (2018), “Black-box adversarial attacks with limited queries and information”, presentation at the 35th International Conference on Machine Learning, Stockholmsmässan, Stockholm, 10-15 July, pp. 2142-2151.

[13] Knight, W. (2017), “5 big predictions for artificial intelligence in 2017”, MIT Technology Review, 4 January, https://www.technologyreview.com/s/603216/5-big-predictions-for-artificial-intelligence-in-2017/.

[8] OECD (2019), Recommendation of the Council on Artificial Intelligence, OECD, Paris.

[7] OECD (2017), OECD Digital Economy Outlook 2017, OECD Publishing, Paris, https://doi.org/10.1787/9789264276284-en.

[9] Russell, S. and P. Norvig (2009), Artificial Intelligence: A Modern Approach, 3rd edition, Pearson, London, http://aima.cs.berkeley.edu/.

[2] Shannon, C. (1950), “XXII. Programming a computer for playing chess”, The London, Edinburgh and Dublin Philosophical Magazine and Journal of Science, Vol. 41/314, pp. 256-275.

[6] Silver, D. et al. (2017), “Mastering the game of Go without human knowledge”, Nature, Vol. 550/7676, pp. 354-359, https://doi.org/10.1038/nature24270.

[5] Somers, J. (2013), “The man who would teach machines to think”, The Atlantic, November, https://www.theatlantic.com/magazine/archive/2013/11/the-man-who-would-teach-machines-to-think/309529/.

[1] Turing, A. (1950), “Computing machinery and intelligence”, in Parsing the Turing Test, Springer, Dordrecht, pp. 23-65.

[3] UW (2006), History of AI, University of Washington, History of Computing Course (CSEP 590A), https://courses.cs.washington.edu/courses/csep590/06au/projects/history-ai.pdf.

[10] Winston, P. (1992), Artificial Intelligence, Addison-Wesley, Reading, MA, https://courses.csail.mit.edu/6.034f/ai3/rest.pdf.

[12] Yala, A. et al. (2017), “Using machine learning to parse breast pathology reports”, Breast Cancer Research and Treatment, Vol. 161/2, pp. 201-211.

Notes

1. These tests were conducted using typed or relayed messages rather than voice.

2. Work on the System Development Lifecycle has been conducted by, among others, the National Institute of Standards and Technology. More recently, standards bodies such as ISO/IEC JTC 1/SC 42, a joint subcommittee of the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC), have begun to explore the AI lifecycle.

3. The Institute of Electrical and Electronics Engineers’ (IEEE) Global Initiative on Ethics of Autonomous and Intelligent Systems is an example.